Over the past year, dozens of AI-powered audit tools have entered the market. Most promise faster audits, smarter testing, and better risk detection.

Yet many auditors who try these tools come away unconvinced.

This is not because AI lacks potential in audit.

It is because many tools are built on a flawed assumption about how audits actually work: that an audit can be improved one isolated feature at a time.

Audit Is a Process, Not a Feature

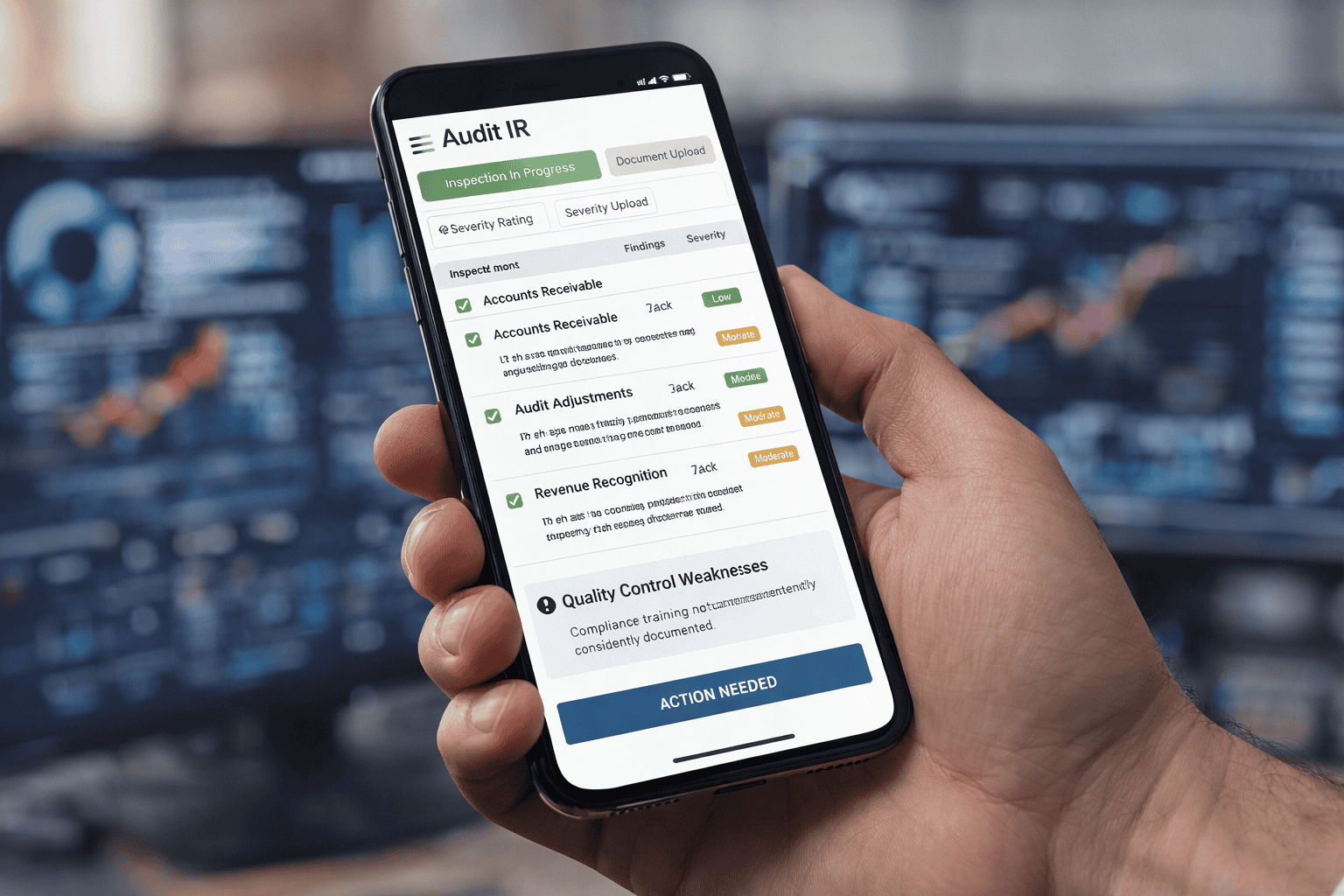

Many AI audit tools are designed as point solutions. A model that flags anomalies. A dashboard that scores risk. A chatbot that answers accounting questions.

These features may look impressive in isolation, but audit is not performed in isolated steps.

Audit quality depends on:

- How findings connect across procedures

- How evidence accumulates over time

- How conclusions are supported and documented

- How decisions are made under professional standards

A tool that optimizes one step while ignoring the rest rarely improves the outcome.

Outputs Matter More Than Predictions

In audit, a prediction without traceability is useless.

Auditors do not need probability scores on their own.

They need clear links between data, procedures, evidence, and conclusions.

Many AI tools focus on detection but stop there. They highlight anomalies without explaining how those findings should be validated, documented, or followed up.

This shifts work onto the audit team rather than removing it.

True value comes when AI contributes to audit-ready outputs, not just insights.

Intelligence Without Context Creates Noise

Audit data is noisy by nature. Unusual does not mean risky. Complex does not mean incorrect.

Generic AI models trained to spot outliers often produce more alerts than teams can reasonably investigate.

Without audit-specific context, AI becomes a distraction.

Effective audit intelligence requires:

- Understanding of audit objectives

- Alignment with assertion-level risks

- Awareness of what constitutes sufficient evidence

Without this grounding, “smart” tools generate busywork.

The Difference Between Assistance and Execution

The most meaningful distinction among AI audit tools is not how advanced the model is.

It is whether the system assists thinking or participates in execution.

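Assistance tools support auditors from outside the workflow, for example by answering questions or surfacing anomalies that someone must then test and document.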

Execution tools operate within the audit flow, shaping how work is actually done.

The latter is harder to build, slower to adopt, and far more valuable.

Why This Is Difficult, but Necessary

Embedding AI into audit execution requires deep domain understanding, careful system design, and respect for professional boundaries.

It cannot be achieved by adding a generic AI layer on top of legacy workflows.

But without this shift, AI in audit will remain superficial: impressive in demos, marginal in practice.

What to Look for Going Forward

As AI adoption in audit continues, the most important question is not “Does this tool use AI?”

The questions that matter are:

Does it reduce blind spots?

Does it improve evidence quality?

Does it strengthen, rather than bypass, professional judgment?

Tools that cannot answer these questions clearly will struggle to deliver lasting value.